Student Registration

Streamlining a high-stress academic moment for thousands of students

A seamless registration process isn’t just a nice-to-have; it’s critical to student success. This work directly reduced stress during peak registration and laid the groundwork for a more intuitive registration ecosystem across our platform.

My Role

Lead UX Designer

The Problem

Course registration is a high-pressure, time-sensitive experience, especially for undergraduates trying to secure popular classes. Students rely heavily on "Saved Schedules" as a fast way to register for multiple courses at once, but the tool was unintuitive, inefficient, and prone to errors.

Goal

Simplify the scheduling experience by enabling students to easily browse, plan, and register for courses with fewer errors and less stress.

Hypothesis

If we improved the usability and efficiency of the Saved Schedule flow, students would be able to create better, conflict-free schedules faster, leading to smoother registrations and fewer support requests.

What We Did

Conducted discovery research to map out current student workflows and identify key friction points

Rapidly prototyped new scheduling interactions and validated them through usability testing

Designed a new flow that reduced cognitive load and clarified system feedback

Launched an early adopter program with 8 institutions to gather in-context feedback and iterate

Partnered with engineering to ensure a smooth, scalable implementation across teams

Outcomes

Reduced the clicks needed to complete a saved schedule registration from 21 to 9

20% increase in students rating the experience as “very easy to use”

Scaled the feature from 1 to 4 scrum teams based on positive adoption and roadmap expansion

Created more bandwidth for faculty and advisors, who reported fewer support issues

Flow Map

Outline of a typical instruction period.

Early Insights

To understand the problem, we first needed to understand the current experience and user needs. I organized an analysis of the current experience and conducted user interviews with students and faculty.

Current Experience

Flow for adding a course to an existing saved schedule.

Initiating Screen

Browse Courses

Course Details

Methodology: Heuristic Evaluation, User Interviews, & Site Visits

Choose Times

Summary

Heuristic Evaluation

The Goal

Expose areas for improvement. UX researchers and designers reviewed each task independently, then came together to discuss their individual ratings of the friction across tasks. This gave us an initial baseline to measure improvement over time.

Early insights from students

“It is difficult to visualize how courses line up and to avoid time conflicts.”

“Navigating was clunky. It would be helpful to see course options without having to go back to the main list as if you are starting over.”

“I want to easily remove or swap course sections.”

The faculty perspective

What advisors told us:

Unfortunately, when registration is too difficult, students give up.

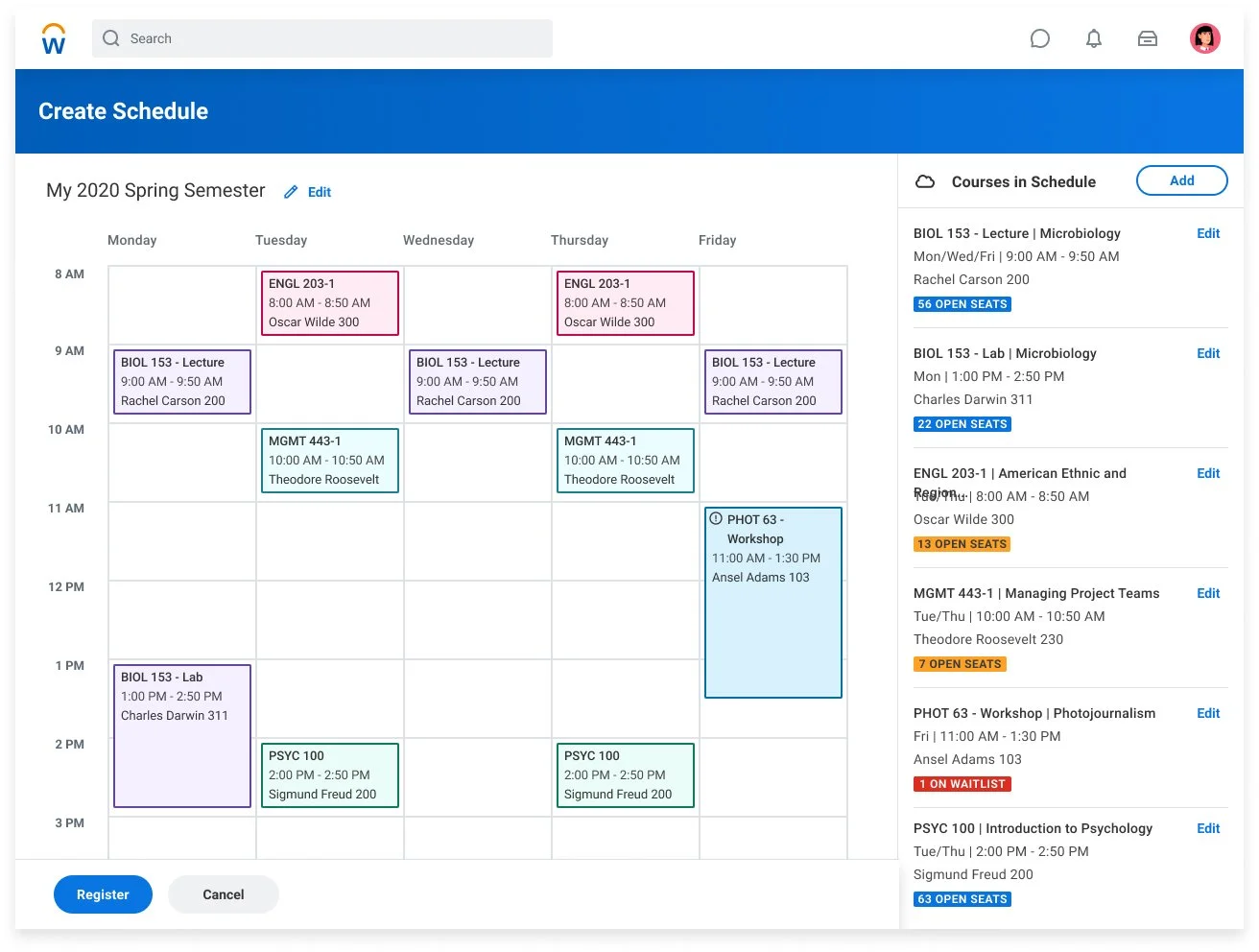

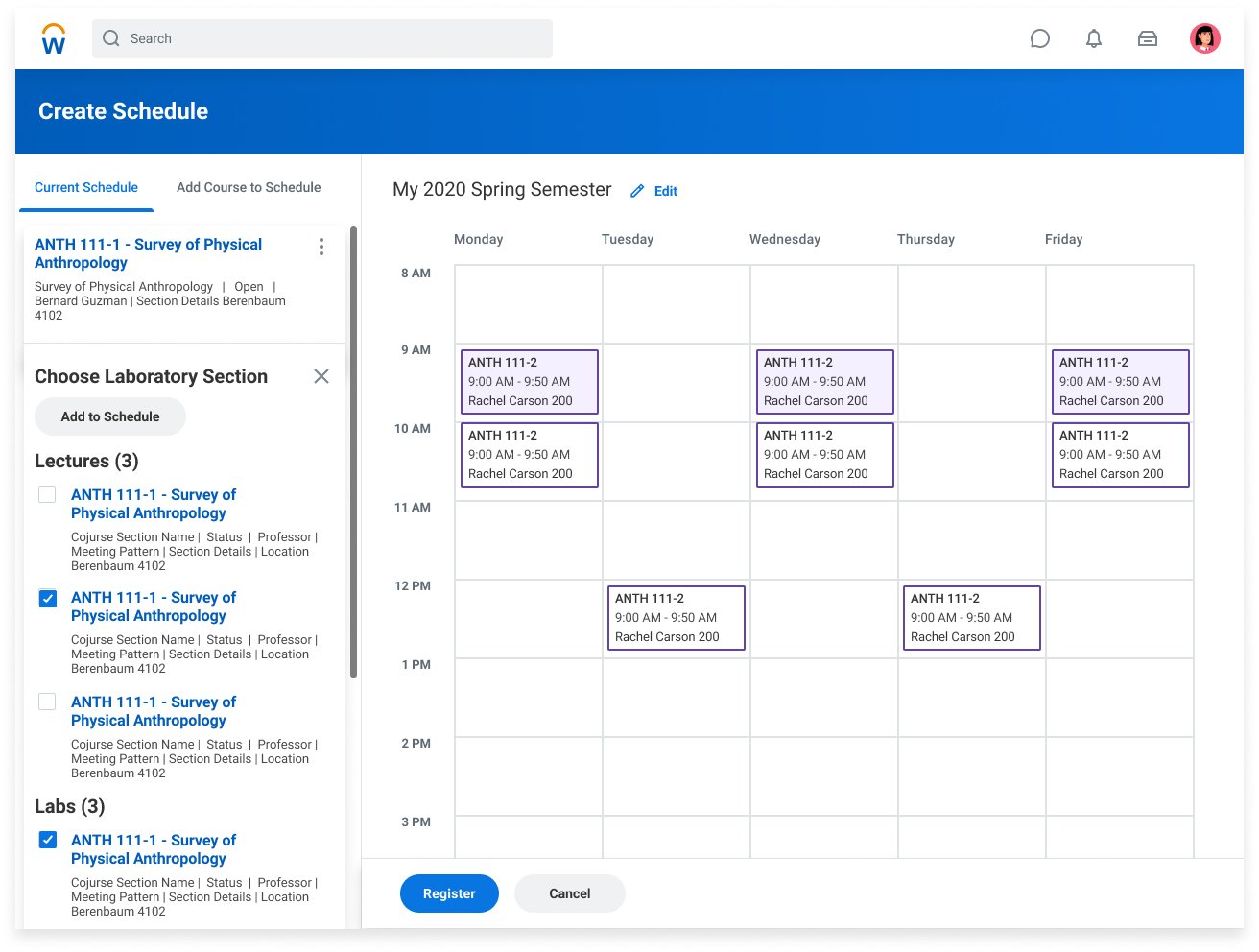

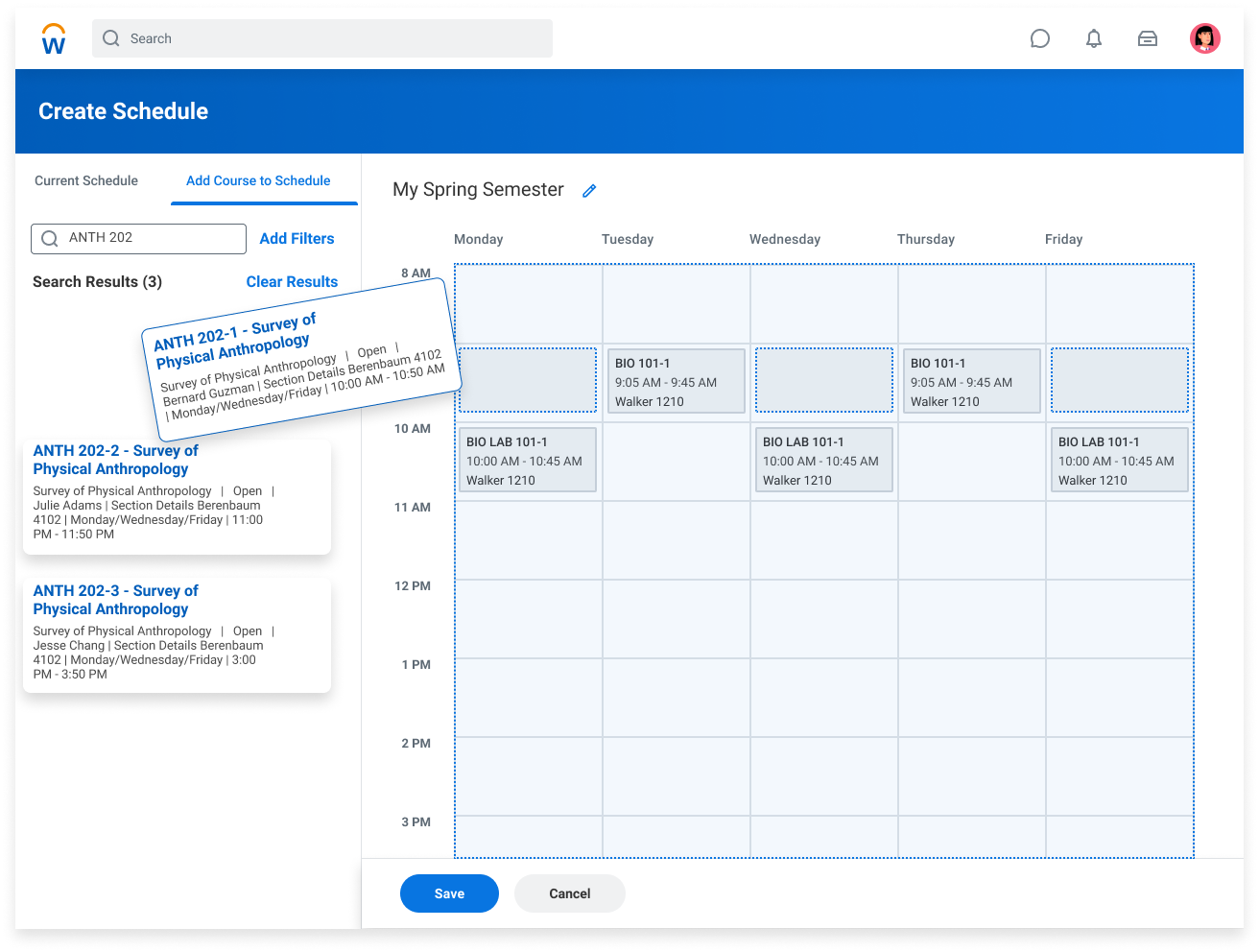

Design Concepts

Low-fidelity mocks and wireframing

The Goal

Use design as a tool to communicate with the team and imagine a direction. Share with the product managers, developers, and QA to gain early alignment.


The UX + Registration team aims to enhance how students plan their classes, build a saved schedule, and register.


Inventory + reflection on the current tools used by students

Interviews to review a prototype of the design proposal


How are students expecting to interact with their courses in a calendar?

Is the current design proposal easy and intuitive to interact with? Specifically:

Searching and filtering

Editing

Section-dependent registration


We learned that the bespoke visual calendar exceeds expectations for student software. We also learned that the usability of the task still needs some improvements.

User Research

This video compilation captures encouraging feedback from users interviewed during testing.

Refine Prototype and Design

After analyzing findings from research, a polished prototype was created and designs were specced out for development.

Measuring Our Impact

As development completes, we want to ensure continuous improvement and iteration on this experience.

Design Partner Groups

Direct, qualitative feedback from key stakeholders, helping assess how well the new design meets business needs.

Early Adopter Groups

Offer a first look at the experience, revealing any issues before a full rollout.

Tracking Usage

Using tools to measure feature adoption, engagement, and task completion rate.