Student Financial Aid

Using Quantitative Evaluation to Drive Decision Making

Project Background

This project aimed to improve the critical experience of students accepting financial aid awards. To achieve this, I incorporated quantitative analysis of click paths and task times, using these insights to uncover barriers and inform a more intuitive design.

About the experiment

The purpose of this experiment is to:

Evaluate whether our proposed designs move core usability in a better direction

Ensure that the changes we make don't negatively impact the following key metrics:

Time on task

Completion rate

Overall usability

Success rate

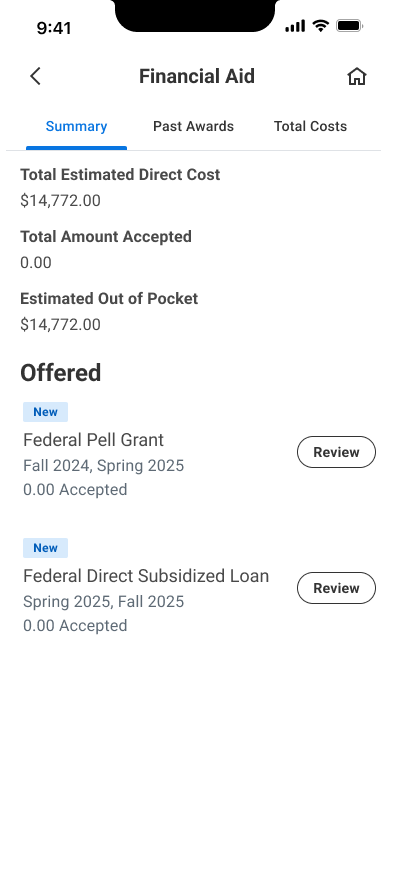

Proposed Solution

Method

Participants completed each task in an Axure prototype, and the resulting data was analyzed by task type for statistically significant differences between the designs.

Time on Task: The amount of time from when the prototype opened to when it closed. The prototype closed automatically once the task was completed.

Usability: A 0 - 100 score computed from responses to two survey items:

“The feature was easy to use.” (1 - 7 Agreement Scale)

“The feature did what I needed it to do for the scenario.” (1 - 7 Agreement Scale)
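As a rough illustration of how two 1 - 7 ratings can become a 0 - 100 score, here is a minimal sketch. It assumes a simple linear rescale (shift each item to 0 - 6, sum, and stretch to 0 - 100); the exact formula used in the study is not stated here, and the function and parameter names are hypothetical.

```python
def usability_score(ease: int, met_need: int) -> float:
    """Rescale two 1-7 agreement ratings to a single 0-100 score.

    Assumption: a linear rescale. The study's actual formula may differ.
    """
    for rating in (ease, met_need):
        if not 1 <= rating <= 7:
            raise ValueError(f"rating must be between 1 and 7, got {rating}")
    # Shift each item to 0-6, sum them (0-12), then stretch to 0-100.
    return ((ease - 1) + (met_need - 1)) * 100 / 12


# Example with made-up ratings: "easy to use" = 6, "did what I needed" = 5.
print(usability_score(6, 5))
```

Under this assumption, two neutral ratings of 4 land at the midpoint of the scale, and two ratings of 7 give the maximum score.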

Findability: A 1 - 7 score from responses to a single survey item:

“How difficult or easy was it to find what you were looking for to complete the task?” (1 - 7 Difficulty Scale)

Results

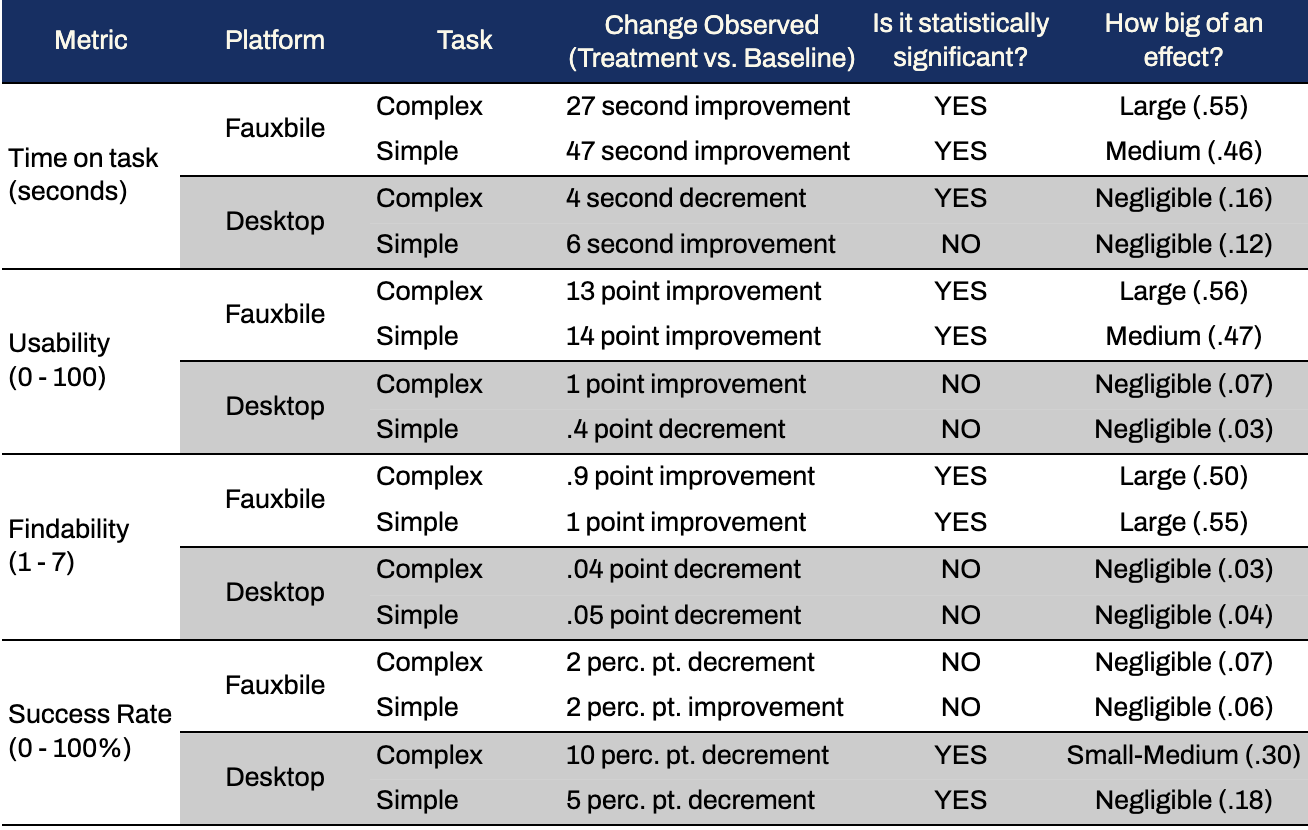

The proposed design performed much better than the baseline design. Time on Task, Usability, and Findability were all significantly better for the new treatment, with medium to large effect sizes.
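For readers unfamiliar with effect sizes, the sketch below shows one common way to quantify the gap between two designs: Cohen's d with a pooled standard deviation, labeled with the conventional 0.2 / 0.5 / 0.8 thresholds. This is an assumption for illustration, since the specific effect size measure used in the study is not stated, and the sample values are made up rather than taken from the experiment.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(baseline, proposed):
    """Standardized mean difference (proposed minus baseline), pooled SD."""
    n1, n2 = len(baseline), len(proposed)
    pooled_var = (
        (n1 - 1) * variance(baseline) + (n2 - 1) * variance(proposed)
    ) / (n1 + n2 - 2)
    return (mean(proposed) - mean(baseline)) / sqrt(pooled_var)


def label_effect(d):
    """Map |d| to the conventional rule-of-thumb labels."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Hypothetical time-on-task samples in seconds (NOT study data).
baseline_seconds = [50, 60, 70]
proposed_seconds = [40, 50, 60]

d = cohens_d(baseline_seconds, proposed_seconds)
print(d, label_effect(d))
```

For time on task, a negative d means the proposed design was faster; for Usability and Findability, a positive d means the proposed design scored higher.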

Takeaways

Quantitative research methods help identify which designs will move the needle. They can take the guesswork out of design decisions and provide clear, data-driven insights before coding begins.